MicroK8s is a Kubernetes distribution from Canonical. It runs on Ubuntu and is advertised as a lightweight Kubernetes distribution, offering high availability and automatic updates.

Today I am going to set up a single-node MicroK8s cluster and use OpenEBS storage for dynamic provisioning.

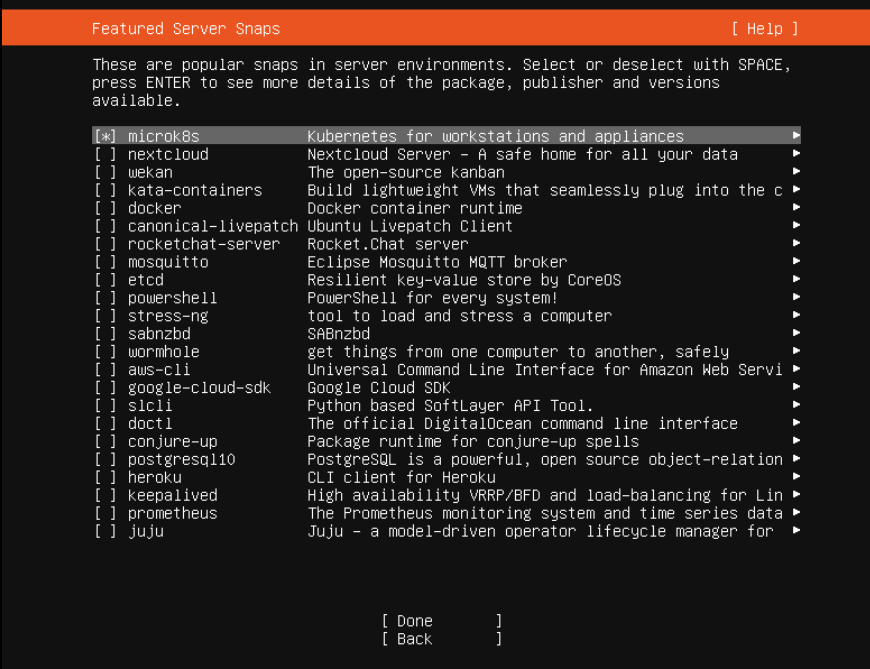

You can install MicroK8s during the Ubuntu OS installation as one of the extra package options, or simply run the snap command on an existing system:

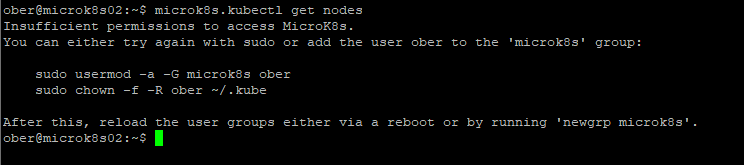

sudo snap install microk8s --classic

MicroK8s comes with kubectl baked in, but by default it is invoked as microk8s.kubectl. When I first run it I get some instructions about my permissions:

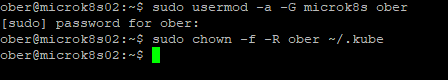

I will run both commands and try again:

sudo usermod -a -G microk8s $USER

sudo chown -f -R $USER ~/.kube

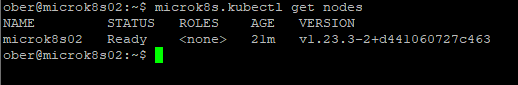

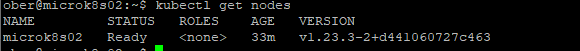

After running newgrp microk8s I can see my node:

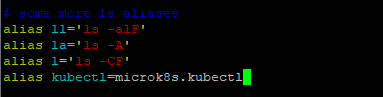

Typing microk8s.kubectl is going to be a nuisance, so I will create an alias in my .bashrc file.
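The alias itself is not shown in the text, so here is a minimal sketch of one way to add it. It assumes bash is the login shell and that ~/.bashrc is read for interactive sessions; the `BASHRC` and `ALIAS_LINE` variables are only there for illustration.

```shell
# Assumption: bash is the login shell and ~/.bashrc is sourced for interactive sessions.
BASHRC="${BASHRC:-$HOME/.bashrc}"
ALIAS_LINE="alias kubectl='microk8s.kubectl'"

# Append the alias only if that exact line is not already in the file,
# so running this twice does not create duplicates.
grep -qxF "$ALIAS_LINE" "$BASHRC" 2>/dev/null || echo "$ALIAS_LINE" >> "$BASHRC"
```

The alias only takes effect in shells started after the file has been changed.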

Then you can either log out and log in again, or run exec bash.

Now I should be able to use kubectl:

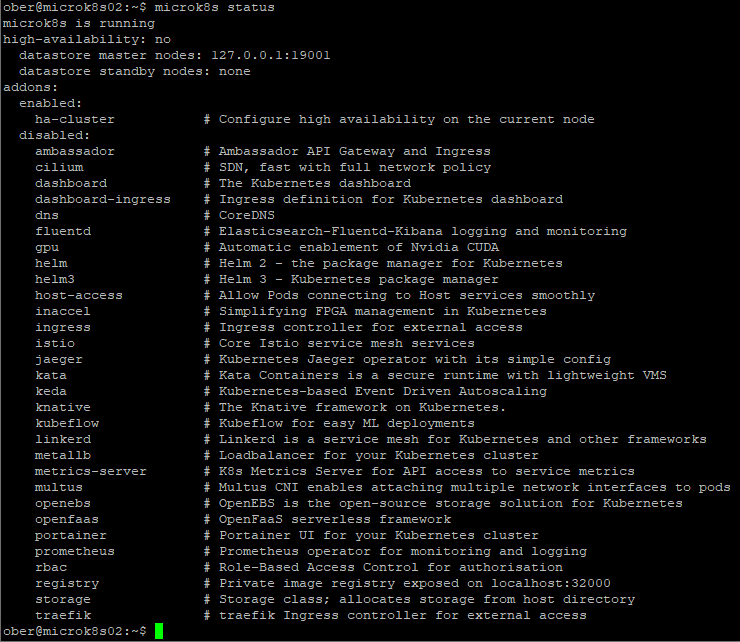

Now that my MicroK8s install is running, I can check the status, which among other things shows me which addons are available.

microk8s status

I am going to enable the following addons for my setup:

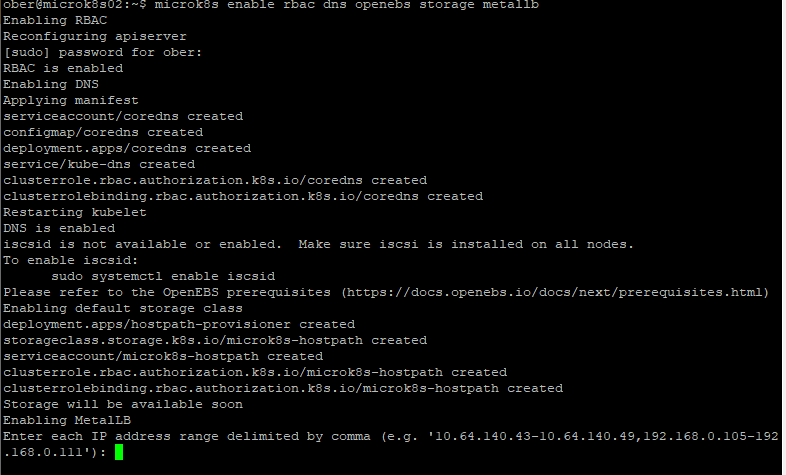

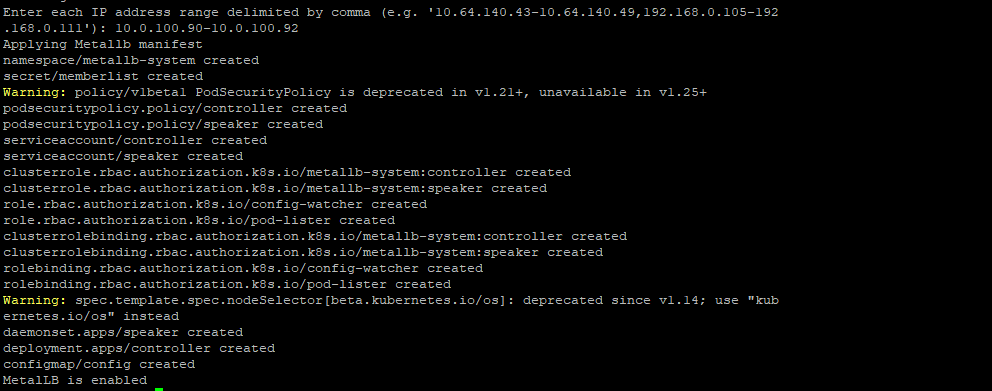

microk8s enable rbac dns openebs storage metallb

I will need to provide a pool of IP addresses for MetalLB:

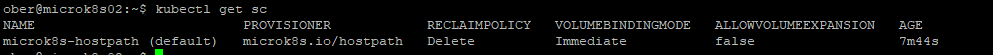

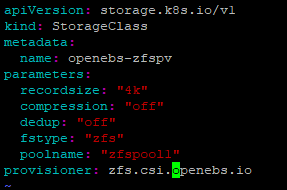
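The address pool can also be passed on the command line instead of being typed at the prompt, using the `metallb:<start>-<end>` form of the addon name. The sketch below uses a hypothetical range, which you would replace with unused addresses on your own network, and only runs the command if microk8s is installed.

```shell
# Hypothetical address range: replace with free addresses on your own LAN.
METALLB_RANGE="192.168.1.240-192.168.1.250"
METALLB_ADDON="metallb:${METALLB_RANGE}"

# Show what is about to be enabled so the range can be reviewed first.
echo "Will enable: ${METALLB_ADDON}"

# Only call microk8s when it is actually installed on this machine.
if command -v microk8s >/dev/null 2>&1; then
  microk8s enable "${METALLB_ADDON}"
fi
```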

By default MicroK8s comes with its own hostpath storage class.

However, I want to use OpenEBS ZFS, since it also supports volume snapshots that can be leveraged by Kasten by Veeam.

I need to install zfsutils beforehand.

sudo apt install zfsutils-linux

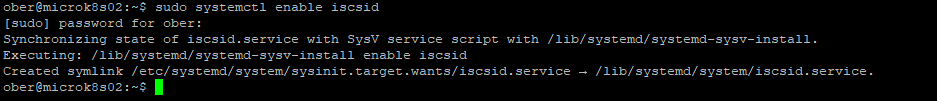

I will also need to enable iscsid in Ubuntu:

sudo systemctl enable iscsid

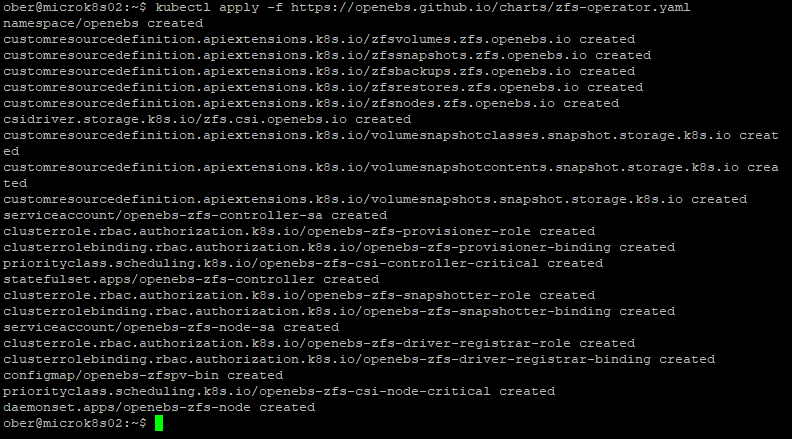

I am going to use the OpenEBS ZFS operator and will apply the manifest directly from their GitHub repository:

kubectl apply -f https://openebs.github.io/charts/zfs-operator.yaml

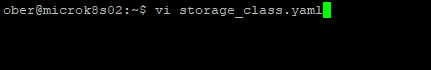

Next I need to create a new storage class. Later on I will create a pool called zfspool1; its name is set in the poolname parameter on the second-to-last line of the class manifest.

    apiVersion: storage.k8s.io/v1
    kind: StorageClass
    metadata:
      name: openebs-zfspv
    parameters:
      recordsize: "4k"
      compression: "off"
      dedup: "off"
      fstype: "zfs"
      poolname: "zfspool1"
    provisioner: zfs.csi.openebs.io

Save that and apply the manifest:

kubectl apply -f storage_class.yaml

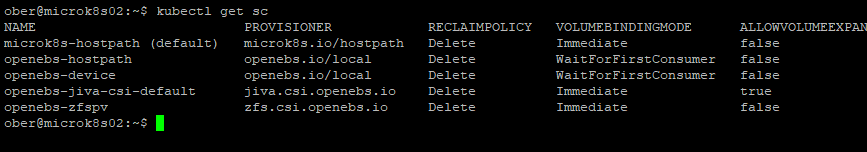

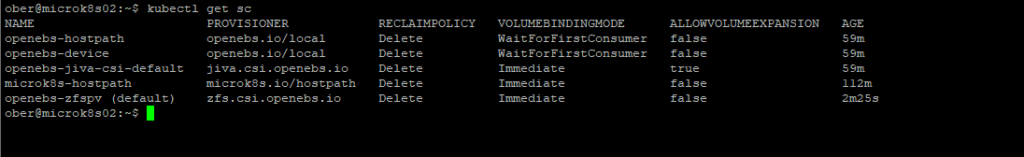

We want to make our openebs-zfspv storage class the default storage class with these two commands:

kubectl patch storageclass microk8s-hostpath -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"false"}}}'

kubectl patch storageclass openebs-zfspv -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'

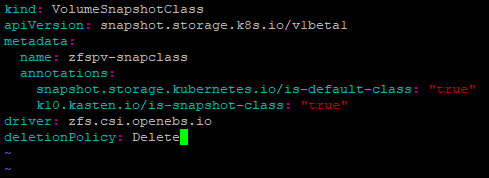

Next I want to create a VolumeSnapshotClass for OpenEBS that uses the zfs.csi.openebs.io CSI driver.

vi snapclass.yaml

    kind: VolumeSnapshotClass
    apiVersion: snapshot.storage.k8s.io/v1beta1
    metadata:
      name: zfspv-snapclass
      annotations:
        snapshot.storage.kubernetes.io/is-default-class: "true"
        k10.kasten.io/is-snapshot-class: "true"
    driver: zfs.csi.openebs.io
    deletionPolicy: Delete

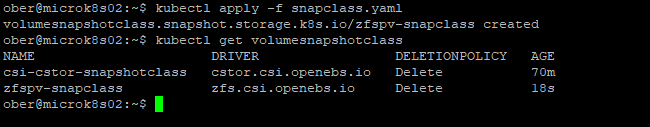

Apply the manifest:

kubectl apply -f snapclass.yaml

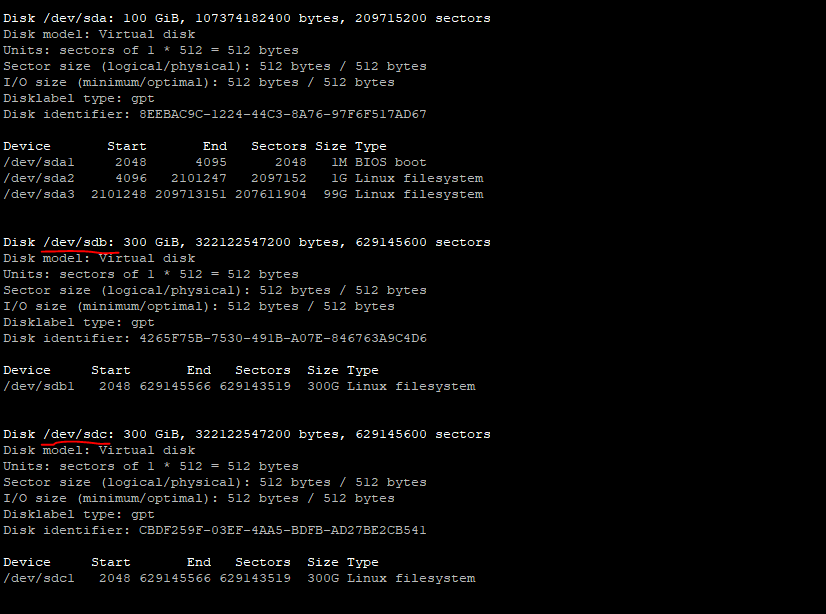

Before I can start using this storage I need to create a ZFS storage pool.

I have two drives that I want to use, /dev/sdb and /dev/sdc:

sudo fdisk -l

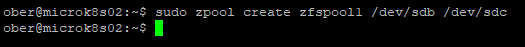

I will create the pool with this command:

sudo zpool create zfspool1 /dev/sdb /dev/sdc

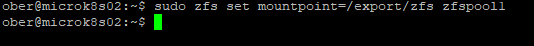

I then need to set a mountpoint:

sudo zfs set mountpoint=/export/zfs zfspool1

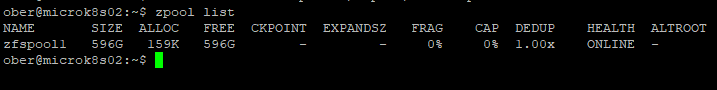

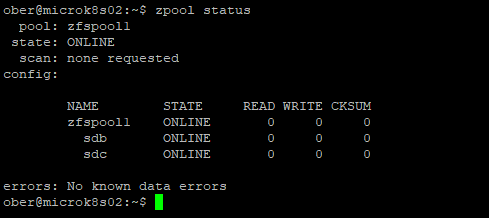

I can check the results with these commands:

zpool list

zpool status

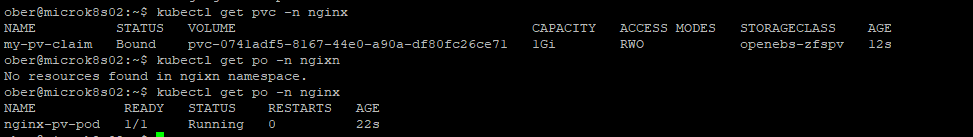

I will now create a simple nginx pod with a persistent volume claim that should be automatically allocated storage from my ZFS pool.

I am going to create an nginx namespace and deploy the pod with its PVC there:

kubectl create ns nginx

Next I will deploy this manifest:

vi nginx.yaml

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: my-pv-claim
      namespace: nginx
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi
    ---
    kind: Pod
    apiVersion: v1
    metadata:
      name: nginx-pv-pod
      namespace: nginx
    spec:
      volumes:
        - name: my-pv-storage
          persistentVolumeClaim:
            claimName: my-pv-claim
      containers:
        - name: nginx-pv-container
          image: nginx
          ports:
            - containerPort: 80
              name: "http-server"
          volumeMounts:
            - mountPath: "/usr/share/nginx/html"
              name: my-pv-storage

kubectl create -f nginx.yaml

Great, I have a pod running with storage on OpenEBS ZFS.
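To confirm the result from the command line, a quick check along these lines should work. It is only a sketch: it uses the namespace, claim, and pod names from the manifest above, calls `microk8s kubectl` rather than the alias (aliases are not expanded in scripts), and does nothing if microk8s is not installed.

```shell
# Names taken from the nginx.yaml manifest above.
NS="nginx"
PVC="my-pv-claim"
POD="nginx-pv-pod"

if command -v microk8s >/dev/null 2>&1; then
  # The claim should report STATUS "Bound" and STORAGECLASS "openebs-zfspv".
  microk8s kubectl -n "$NS" get pvc "$PVC"

  # Block until the pod is Ready, or give up after two minutes.
  microk8s kubectl -n "$NS" wait --for=condition=Ready "pod/$POD" --timeout=120s

  # Show the filesystem mounted at the nginx web root inside the container.
  microk8s kubectl -n "$NS" exec "$POD" -- df -h /usr/share/nginx/html
fi
```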

One of the Veeam engineers in Belgium, Timothy Dewin, has a great GitHub gist with an Ubuntu Kasten lab creation script that helped me when setting this up. He also has some other great content there, so I highly recommend everyone take a look: https://gist.github.com/tdewin/46d0c5e81481fe91f5c84184cb21e949